Abstract

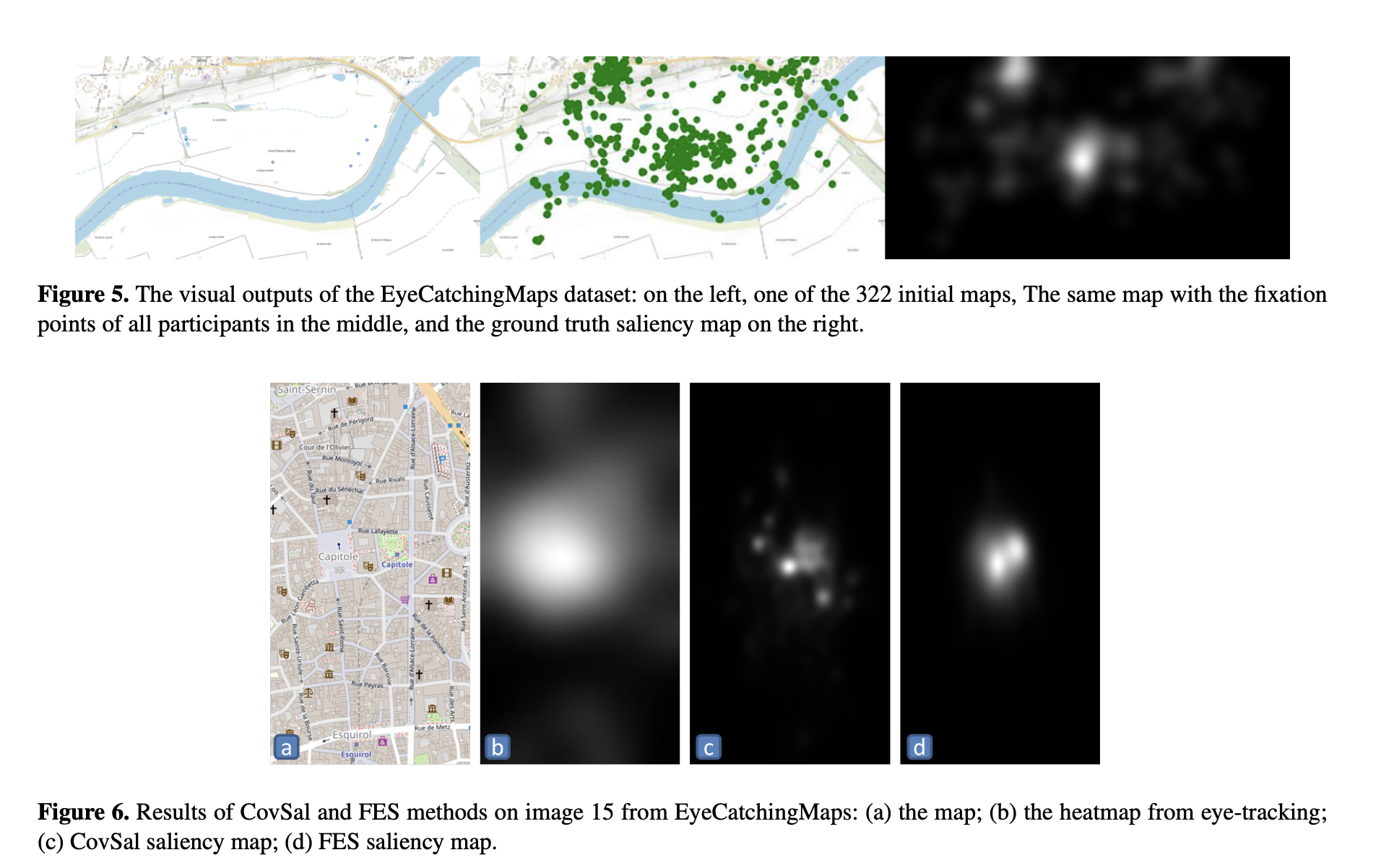

Saliency models try to predict where people look during the first seconds of viewing an image. To assess how well these models predict saliency on maps, we lack a ground truth against which to compare them. This paper proposes EyeCatchingMaps, an open dataset that can be used to benchmark saliency models on maps. The dataset was obtained by using an eye-tracker to record the gaze of participants looking at different maps for 3 seconds. The use of EyeCatchingMaps is demonstrated by comparing two saliency models from the literature against the real saliency maps derived from people’s gaze.
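The benchmarking idea described above can be illustrated with a minimal sketch. It is not the authors' pipeline: the Gaussian smoothing of fixations, the Pearson correlation score, the function names, the grid size, and the fixation coordinates are all assumptions chosen for illustration, and the dataset's actual file format is not used here.

```python
import math

def fixation_density_map(fixations, width, height, sigma=2.0):
    """Build a saliency-like map by summing a Gaussian around each (x, y) fixation.

    Hypothetical helper: one common way to turn gaze points into a map,
    not necessarily the method used to build EyeCatchingMaps.
    """
    grid = [[0.0] * width for _ in range(height)]
    for fx, fy in fixations:
        for y in range(height):
            for x in range(width):
                d2 = (x - fx) ** 2 + (y - fy) ** 2
                grid[y][x] += math.exp(-d2 / (2.0 * sigma ** 2))
    # Normalise so the highest value is 1.
    peak = max(max(row) for row in grid)
    if peak > 0:
        grid = [[v / peak for v in row] for row in grid]
    return grid

def pearson_cc(map_a, map_b):
    """Pearson correlation between two maps of identical size."""
    a = [v for row in map_a for v in row]
    b = [v for row in map_b for v in row]
    if len(a) != len(b):
        raise ValueError("maps must have the same number of cells")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a constant map carries no spatial signal
    return cov / math.sqrt(var_a * var_b)

# Made-up fixations on a tiny 16 x 12 grid, standing in for recorded gaze.
observed = fixation_density_map([(3, 4), (10, 6), (11, 7)], 16, 12)

# Two stand-in "model predictions": one near the observed gaze, one far away.
predicted_near = fixation_density_map([(10, 6)], 16, 12, sigma=3.0)
predicted_far = fixation_density_map([(0, 11)], 16, 12, sigma=3.0)

score_near = pearson_cc(observed, predicted_near)
score_far = pearson_cc(observed, predicted_far)
```

Under these assumptions, a prediction that concentrates saliency where participants actually looked receives a higher correlation score than one that places it elsewhere, which is the kind of comparison a ground-truth dataset makes possible.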

Full citation (dataset) with DOI

Wenclik, L., & Touya, G. (2024). EyeCatchingMaps, a Dataset to Assess Saliency Models on Maps [Data set]. Zenodo. https://doi.org/10.5281/zenodo.10619513

Related articles

Wenclik, L., & Touya, G. (2024). EyeCatchingMaps, a Dataset to Assess Saliency Models on Maps. AGILE GIScience Ser., 5, 51. https://doi.org/10.5194/agile-giss-5-51-2024

https://agile-giss.copernicus.org/articles/5/51/2024/